Reverse engineering undocumented, defective API: Interpol

August 30, 2021 // bugs , dev , language , projects , python , asyncio , scraping


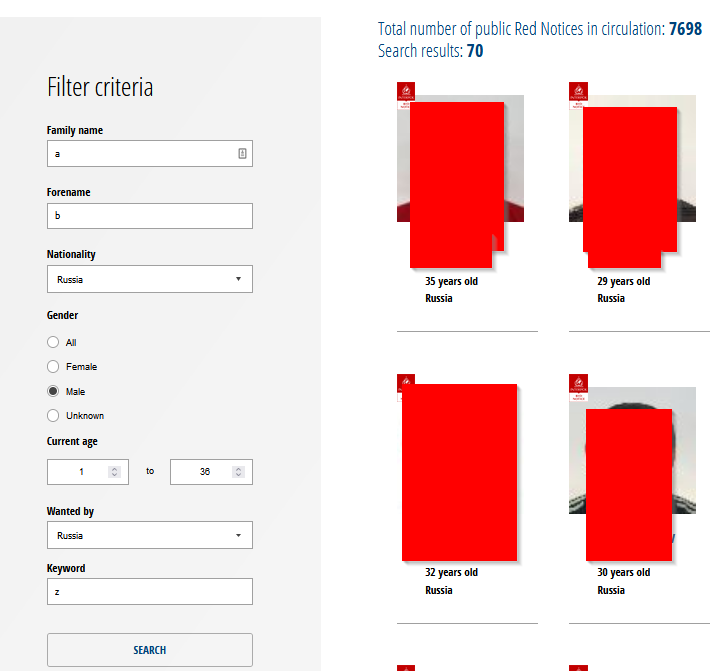

Some time ago I got interested in scraping data from Interpol Red Notices to see whether it held any interesting patterns. It was also a great chance to learn asyncio.

In the process I struggled so much with how broken their API is that I cared enough to document it.

The API

After looking at the network requests in the browser’s developer tools, it’s clear that there’s a REST API behind the site, which should make scraping easier, right? Not really; more on that later.

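The site lets you filter by at least these parameters: name, forename, nationality, sexId, ageMin, ageMax, arrestWarrantCountryId and freeText.

A request using all of them can be made with curl this way: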

curl "https://ws-public.interpol.int/notices/v1/red?&name=a&forename=b&nationality=RU&sexId=M&ageMin=1&ageMax=36&arrestWarrantCountryId=RU&freeText=z"

{
  "total": 1,
  "query": {
    "page": 1,
    "resultPerPage": 20,
    "name": "a",
    "forename": "b",
    "nationality": "RU",
    "freeText": "z",
    "ageMin": 1,
    "ageMax": 36,
    "sexId": "M",
    "arrestWarrantCountryId": "RU"
  },
  "_embedded": {
    "notices": [
      {
        "forename": "FAKIUS",
        "date_of_birth": "1989/06/24",
        "entity_id": "2010/52486",
        "nationalities": [
          "CA"
        ],
        "name": "LASTNAMESON",
        "_links": {
          "self": {
            "href": "https://ws-public.interpol.int/notices/v1/red/2021-45643"
          },
          "images": {
            "href": "https://ws-public.interpol.int/notices/v1/red/2021-45643/images"
          },
          "thumbnail": {
            "href": "https://ws-public.interpol.int/notices/v1/red/2021-45643/images/62195643"
          }
        }
      }
    ]
  }
}

So, a priori, we just need to paginate over all the results to get all the data.
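As a rough sketch of that naive plan, the search URLs for every page can be built from the total reported by the first response. The helper name page_urls is made up for illustration; resultPerPage and page are the parameter names echoed back in the query object above.

```python
from urllib.parse import urlencode

BASE_URL = "https://ws-public.interpol.int/notices/v1/red"


def page_urls(total, per_page=20, **filters):
    """Yield one search URL per result page (hypothetical helper)."""
    pages = -(-total // per_page)  # ceiling division
    for page in range(1, pages + 1):
        # Filters first, then the paging parameters seen in the "query" object.
        query = {**filters, "resultPerPage": per_page, "page": page}
        yield f"{BASE_URL}?{urlencode(query)}"


for url in page_urls(45, name="a"):
    print(url)
```

Each URL would then be fetched in turn and the notices collected from _embedded.notices.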

We can, with this information, request the detail for each of the entries:

curl "https://ws-public.interpol.int/notices/v1/red/2010-52486"

{
  "arrest_warrants": [
    {
      "issuing_country_id": "CA",
      "charge": "JSON forgery",
      "charge_translation": null
    }
  ],
  "weight": 68,
  "forename": "JOHN",
  "date_of_birth": "1989/06/24",
  "entity_id": "2010-52486",
  "languages_spoken_ids": [
    "ENG",
    "SOM"
  ],
  "nationalities": [
    "CA",
    "SO"
  ],
  "height": 1.89,
  "sex_id": "M",
  "country_of_birth_id": null,
  "name": "LASTNAMESON",
  "distinguishing_marks": null,
  "eyes_colors_id": [
    "BRO"
  ],
  "hairs_id": [
    "BLA"
  ],
  "place_of_birth": null,
  "_embedded": {
    "links": []
  },
  "_links": {
    "self": {
      "href": "https://ws-public.interpol.int/notices/v1/red/2010-52486"
    },
    "images": {
      "href": "https://ws-public.interpol.int/notices/v1/red/2010-52486/images"
    },
    "thumbnail": {
      "href": "https://ws-public.interpol.int/notices/v1/red/2010-52486/images/123123123"
    }
  }
}
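Going from a search response to the detail URLs could look roughly like this. The function name detail_urls is hypothetical, and the fallback that swaps the slash in entity_id for a dash is only an assumption based on the two samples above; the _links.self.href value is used whenever it is present.

```python
BASE_URL = "https://ws-public.interpol.int/notices/v1/red"


def detail_urls(search_response):
    """Collect the detail URL of every notice in a search response."""
    urls = []
    for notice in search_response.get("_embedded", {}).get("notices", []):
        href = notice.get("_links", {}).get("self", {}).get("href")
        if href is None:
            # Assumption: "2010/52486" in the listing maps to ".../red/2010-52486".
            href = f"{BASE_URL}/{notice['entity_id'].replace('/', '-')}"
        urls.append(href)
    return urls
```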

The API: shortcomings

Very quickly I discovered that it ain’t that easy.

Firstly, you can’t paginate past 160 results. For any given query you can only obtain the latest 160 hits (they’re always in reverse chronological order), no matter the values of the resultPerPage or page parameters. So the scraper has to use search terms that yield 160 results or fewer; otherwise we miss older notices. This means obtaining the latest results is easy, but scraping the bottom of the bucket might pose a problem.
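A tiny sketch of how a scraper might react to that cap. It assumes the total field keeps reporting the full match count even when only 160 notices are reachable; the names MAX_REACHABLE, needs_narrowing and unreachable are illustrative.

```python
MAX_REACHABLE = 160  # cap described above


def needs_narrowing(search_response):
    """True when the query matches more notices than can be paged through."""
    return search_response["total"] > MAX_REACHABLE


def unreachable(search_response):
    """Number of matches hidden behind the cap for this query."""
    return max(0, search_response["total"] - MAX_REACHABLE)
```

Any query for which needs_narrowing returns True has to be split into more selective ones.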

Also, while most text fields support regexes (yay!), the way they work is… unreliable. For example, any string containing a literal \. (not the wildcard .) plus any other escaped regex character (\* for example) causes a 504 error, so what seems to be a backend bug effectively blocks naive/brute-force attempts to access all the data.
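One way to stay clear of that failure mode is to check a pattern before sending it. This is only a guess at the trigger as described above (an escaped dot together with some other escaped character); the function name looks_risky is made up.

```python
import re

# Matches a backslash followed by the character it escapes.
ESCAPED = re.compile(r"\\(.)")


def looks_risky(pattern):
    """True if the pattern has an escaped dot plus another escaped character."""
    escaped = ESCAPED.findall(pattern)
    return "." in escaped and any(ch != "." for ch in escaped)
```

Patterns built only from letters, anchors and .* never trip this check.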

While testing requests to see if I could find some hidden field, or ordering by certain value, I found that:

curl 'https://ws-public.interpol.int/notices/v1/red?&page=90&name.dir="desc"'

would cause:

{

"name": "CastError",

"message": "Cast to string failed for value \"{ dir: '\"desc\"' }\" at path \"name\" for model \"Red\""

}

That’s a Mongoose error, so now we know there’s Node.js (and most likely MongoDB) in the backend!

Another problem I hit was that my script was just way too fast. Coroutines in Python go a long way, and without noticing I was reaching roughly 1000 reqs/s while barely denting my CPU usage. Their API is behind Akamai, so I started getting 503 errors pretty quickly, and Akamai blocked me for about 5-10 minutes each time.

To overcome that in an asyncio way, I wrote the following class:

import asyncio
from asyncio import BoundedSemaphore, Lock

class SpeedSemaphore:
    """Limits concurrent holders (value) and acquisitions per second (rate)."""

    def __init__(self, BaseClass=BoundedSemaphore, value=1, rate=1):
        # create_task() needs a running event loop, so build this inside a coroutine.
        self.semaphore = BaseClass(value=value)
        self.task = asyncio.create_task(self.requestRateLimiter(rate))
        self.lock = Lock()
        self._deleted = False

    async def acquire(self):
        await self.semaphore.acquire()
        # The lock is only ever released by requestRateLimiter, once per tick.
        await self.lock.acquire()

    def release(self):
        self.semaphore.release()

    def locked(self):
        return self.semaphore.locked() or self.lock.locked()

    async def __aenter__(self):
        await self.acquire()

    async def __aexit__(self, exc_type, exc, tb):
        self.release()

    def __del__(self):
        self._deleted = True
        # Caveat: asyncio.run() raises RuntimeError if a loop is still running in this thread.
        asyncio.run(self._await())

    async def _await(self):
        await self.task

    async def requestRateLimiter(self, rate):
        # Release the lock every 1/rate seconds so that one more acquire() can proceed.
        while not self._deleted:
            if self.lock.locked():
                self.lock.release()
            await asyncio.sleep(1 / rate)

It’s both a semaphore and a speed limiter: it limits the number of concurrent connections via its value parameter and the number of requests per second via rate. The interesting point is how the async method requestRateLimiter releases the lock at the desired rate; we also make sure this method is awaited on destruction to prevent the dreaded “coroutine was never awaited” errors. Of course, if there are too many coroutines, or they use a significant amount of CPU, the actual rate will be lower than the one requested, but I’m fine with such shortcomings for now.
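For comparison, here is a compact variant of the same idea (a semaphore plus a lock reopened by a ticking task). It is not the class from the script: the names RateLimiter, close and demo are made up, and it swaps the destructor for an explicit close() that cancels the ticker.

```python
import asyncio
import time


class RateLimiter:
    """Caps concurrency (value) and how often the gate opens (rate per second)."""

    def __init__(self, value=1, rate=1.0):
        self._semaphore = asyncio.BoundedSemaphore(value)
        self._gate = asyncio.Lock()
        self._interval = 1 / rate
        self._ticker = None

    async def _tick(self):
        # Reopen the gate once per interval so one more holder can pass.
        while True:
            await asyncio.sleep(self._interval)
            if self._gate.locked():
                self._gate.release()

    async def __aenter__(self):
        if self._ticker is None:  # started lazily, inside the running loop
            self._ticker = asyncio.create_task(self._tick())
        await self._semaphore.acquire()
        await self._gate.acquire()  # stays closed until the next tick
        return self

    async def __aexit__(self, exc_type, exc, tb):
        self._semaphore.release()

    async def close(self):
        # Cancel the ticker explicitly instead of relying on __del__.
        if self._ticker is not None:
            self._ticker.cancel()
            await asyncio.gather(self._ticker, return_exceptions=True)
            self._ticker = None


async def demo(jobs=3, rate=20.0):
    limiter = RateLimiter(value=2, rate=rate)
    start = time.monotonic()

    async def job():
        async with limiter:
            await asyncio.sleep(0)  # stand-in for an HTTP request

    await asyncio.gather(*(job() for _ in range(jobs)))
    await limiter.close()
    return time.monotonic() - start
```

Running asyncio.run(demo()) lets the first job through immediately and spaces the other two one tick apart.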

Fields of interest

The actual documents in the database have a plethora of fields, and some of them can be queried through the web service. Here I cover those that are of interest for the scraping.

name

Matches the last name of the suspect. It supports regular expressions. Suspects without a known last name will never be matched if this field is used.

forename

Matches the first name of the suspect. It supports regular expressions. Suspects without a known first name will never be matched if this field is used.

ageMax and ageMin

Respectively, the maximum and minimum age of the suspect. Some suspects don’t have a known age so, likewise, they won’t appear among the results when either parameter is specified.

arrestWarrantCountryId

This is the country (or international organization, like the International Criminal Court) that issued the notice. The good thing about this one is that it’s always present: even if the suspect has no known identity, date of birth or nationality (e.g. cases with just a picture), their notice is always put there by some Interpol member. The bad thing is that most requests using it seem to time out, which makes me think there’s a full table scan or some other performance shenanigans behind it.

From my script:

Bad reply https://ws-public.interpol.int/notices/v1/red?&resultPerPage=160&arrestWarrantCountryId=DJ waiting 60s status 504

Bad reply https://ws-public.interpol.int/notices/v1/red?&resultPerPage=160&arrestWarrantCountryId=AD waiting 60s status 504

Bad reply https://ws-public.interpol.int/notices/v1/red?&resultPerPage=160&arrestWarrantCountryId=AO waiting 60s status 504

Bad reply https://ws-public.interpol.int/notices/v1/red?&resultPerPage=160&arrestWarrantCountryId=AF waiting 60s status 504

Bad reply https://ws-public.interpol.int/notices/v1/red?&resultPerPage=160&arrestWarrantCountryId=AL waiting 60s status 504

Bad reply https://ws-public.interpol.int/notices/v1/red?&resultPerPage=160&arrestWarrantCountryId=AR waiting 60s status 504

Bad reply https://ws-public.interpol.int/notices/v1/red?&resultPerPage=160&arrestWarrantCountryId=CG waiting 60s status 504

Bad reply https://ws-public.interpol.int/notices/v1/red?&resultPerPage=160&arrestWarrantCountryId=AM waiting 60s status 504

Bad reply https://ws-public.interpol.int/notices/v1/red?&resultPerPage=160&arrestWarrantCountryId=CL waiting 60s status 504

Bad reply https://ws-public.interpol.int/notices/v1/red?&resultPerPage=160&arrestWarrantCountryId=DO waiting 60s status 504

Bad reply https://ws-public.interpol.int/notices/v1/red?&resultPerPage=160&arrestWarrantCountryId=AT waiting 60s status 504

Bad reply https://ws-public.interpol.int/notices/v1/red?&resultPerPage=160&arrestWarrantCountryId=AZ waiting 60s status 504

Bad reply https://ws-public.interpol.int/notices/v1/red?&resultPerPage=160&arrestWarrantCountryId=CR waiting 60s status 504

Bad reply https://ws-public.interpol.int/notices/v1/red?&resultPerPage=160&arrestWarrantCountryId=BD waiting 60s status 504

Bad reply https://ws-public.interpol.int/notices/v1/red?&resultPerPage=160&arrestWarrantCountryId=HR waiting 60s status 504

Bad reply https://ws-public.interpol.int/notices/v1/red?&resultPerPage=160&arrestWarrantCountryId=BZ waiting 60s status 504

Bad reply https://ws-public.interpol.int/notices/v1/red?&resultPerPage=160&arrestWarrantCountryId=BO waiting 60s status 504

Bad reply https://ws-public.interpol.int/notices/v1/red?&resultPerPage=160&arrestWarrantCountryId=CO waiting 60s status 504

Bad reply https://ws-public.interpol.int/notices/v1/red?&resultPerPage=160&arrestWarrantCountryId=BA waiting 60s status 504

Bad reply https://ws-public.interpol.int/notices/v1/red?&resultPerPage=160&arrestWarrantCountryId=EC waiting 60s status 504

Bad reply https://ws-public.interpol.int/notices/v1/red?&resultPerPage=160&arrestWarrantCountryId=BR waiting 60s status 504

Bad reply https://ws-public.interpol.int/notices/v1/red?&resultPerPage=160&arrestWarrantCountryId=KH waiting 60s status 504

Bad reply https://ws-public.interpol.int/notices/v1/red?&resultPerPage=160&arrestWarrantCountryId=CM waiting 60s status 504

This approach slows things down so much that, even though I left in the option to filter per country, it’s turned off since it really makes their service grind to a halt.
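The "waiting 60s" lines above come from a retry loop. A minimal sketch of such a loop follows, assuming a fetch coroutine that returns a (status, body) pair; fetch, fetch_with_retry, the attempts limit and the set of retried statuses are all illustrative, not taken from the script.

```python
import asyncio

RETRY_STATUSES = {503, 504}  # the two error codes mentioned in this post


async def fetch_with_retry(fetch, url, wait=60.0, attempts=5):
    """Await fetch(url) until the status is not retryable or attempts run out.

    attempts must be at least 1.
    """
    for attempt in range(attempts):
        status, body = await fetch(url)
        if status not in RETRY_STATUSES or attempt == attempts - 1:
            return status, body
        print(f"Bad reply {url} waiting {wait:g}s status {status}")
        await asyncio.sleep(wait)
```

Passing the fetch coroutine in as an argument keeps the HTTP client out of the retry logic.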

freeText

This is a mysterious field. It seems to match:

- Gender: F, M, U. It can already be queried on its own through sexId, but still.

- The charges pressed as well as the translation. Searching for murder will find people charged with murder.

- Country: For example, SV finds suspects whose nationality is El Salvador, or wanted by El Salvador, or whose country of birth is El Salvador, or combinations of them (haven’t verified because those 3 fields usually match).

- Language: SPA matches Spanish speakers.

- Others: I don’t have examples now, but in some tests I found some strings to match something that isn’t available in any of the public fields.

Fields of non-interest

- nationality: while relatively selective, it’s usually that of the issuing country. Some suspects have no known nationality and are left out.

- sexId: not very selective, most wanted are men.

Solution

The solution isn’t pretty but, after reviewing the results, it works: it’s a naive/brute-force search run independently on each of the following fields: name, forename and freeText.

By brute force I mean using the regex ^A.*B$, where A and B are progressively extended inwards whenever the matches exceed 160 hits. Why that, when ^A.*$ could work? Well, for the warrant text the prefix-only approach dragged on for too long with common words (organization, participation, etc.). With both ends anchored, at least we can detect copy-paste cases earlier (when both the start and the end match, it’s usually a copy-paste and not just a common charge).
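The expansion described above can be sketched as a recursive generator. This is a reconstruction under assumptions, not the script’s code: count_hits stands for a request that returns the total for a regex, the alphabet is limited to A-Z, the prefix and suffix grow alternately, and the extra exact-match query covers values equal to A+B, which no longer pattern would match.

```python
import string

LIMIT = 160    # cap on reachable results
MAX_LEN = 45   # combined length of A and B used as the give-up heuristic
ALPHABET = string.ascii_uppercase  # assumption: values only contain A-Z


def narrow(count_hits, prefix="", suffix="", grow_prefix=True,
           limit=LIMIT, max_len=MAX_LEN):
    """Yield (pattern, hits) pairs that together cover every match of ^A.*B$."""
    pattern = f"^{prefix}.*{suffix}$"
    hits = count_hits(pattern)
    if hits == 0:
        return
    if hits <= limit or len(prefix) + len(suffix) >= max_len:
        # Small enough to page through, or too long to keep extending:
        # an oversized pattern has to be split some other way (e.g. by age).
        yield pattern, hits
        return
    # A value that is exactly A+B is not matched by any longer A or B.
    exact = f"^{prefix}{suffix}$"
    exact_hits = count_hits(exact)
    if exact_hits:
        yield exact, exact_hits
    for ch in ALPHABET:
        if grow_prefix:
            yield from narrow(count_hits, prefix + ch, suffix, False, limit, max_len)
        else:
            yield from narrow(count_hits, prefix, ch + suffix, True, limit, max_len)
```

Every yielded pattern with hits at or below the limit can then be paged through normally.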

So I assumed that once A and B get too long (I used 45 characters combined as a heuristic), we have matched freeText against a copy-pasted warrant (I’m looking at you, Russia). Then, and only then, I start splitting the request into age slices to narrow down all the notices. Otherwise brute force just throws out thousands of requests that keep finding the, exact, same, piece, of, text, without ever getting down to the 160-hit API limit.
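The age slicing can be sketched as a bisection over the ageMin/ageMax range. The post doesn’t say how the slices are chosen, so halving the range is an assumption, as is treating both bounds as inclusive; count_hits(age_min, age_max) stands for a request returning the total for the pattern with those two parameters added.

```python
def age_slices(age_min, age_max, count_hits, limit=160):
    """Yield (age_min, age_max, hits) ranges whose hit count fits under limit."""
    hits = count_hits(age_min, age_max)
    if hits == 0:
        return
    if hits <= limit or age_min == age_max:
        # A single-year slice that is still over the limit cannot be split further.
        yield age_min, age_max, hits
        return
    mid = (age_min + age_max) // 2
    yield from age_slices(age_min, mid, count_hits, limit)
    yield from age_slices(mid + 1, age_max, count_hits, limit)
```

Notices without a known age never show up in these slices, as noted for ageMax and ageMin.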

Stats as of 2021/09/02:

- Total: 7699

- With a last name: 7693 (6 unknown)

- With a first name: 7681 (18 unknown)

- With an age: 7689 (10 unknown)

- Found: 7666 (33 missing)

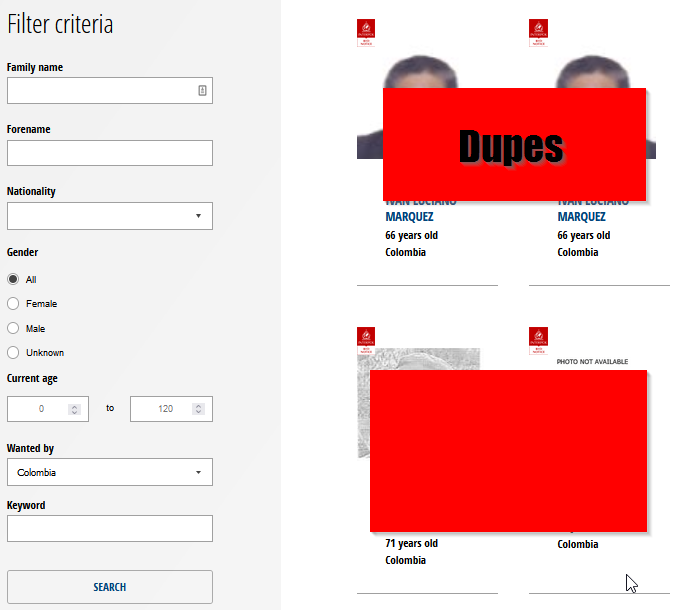

Do I miss that many? Hard to tell, just look:

Same name, pictures, and notice ID.

So I added some duplicate verification code in the scraping, and the results were:

WARNING: notice 2002-25285 found 2 times

WARNING: notice 2002-25297 found 2 times

WARNING: notice 2002-44137 found 2 times

WARNING: notice 2005-30404 found 2 times

WARNING: notice 2005-56122 found 2 times

WARNING: notice 2007-24209 found 2 times

WARNING: notice 2009-24472 found 2 times

WARNING: notice 2010-43947 found 2 times

WARNING: notice 2010-43964 found 2 times

WARNING: notice 2010-44010 found 2 times

WARNING: notice 2011-20073 found 2 times

WARNING: notice 2011-21121 found 2 times

WARNING: notice 2013-57674 found 3 times

WARNING: notice 2014-8644 found 2 times

WARNING: notice 2015-52561 found 2 times

WARNING: notice 2016-74800 found 2 times

WARNING: notice 2017-130054 found 3 times

WARNING: notice 2017-131907 found 2 times

WARNING: notice 2017-191597 found 2 times

WARNING: notice 2017-192033 found 2 times

WARNING: notice 2018-14932 found 2 times

WARNING: notice 2018-55088 found 3 times

WARNING: notice 2018-5937 found 2 times

WARNING: notice 2018-89732 found 2 times

WARNING: notice 2019-112618 found 2 times

WARNING: notice 2019-20349 found 2 times

WARNING: notice 2019-77515 found 2 times

WARNING: notice 2019-86239 found 2 times

WARNING: notice 2019-89223 found 2 times

WARNING: notice 2019-89678 found 2 times

WARNING: notice 2020-56563 found 2 times

30 dupes, plus 3 found once again each, equals 33, exactly those missing from my scraping and the total. In fact I found them all. Sigh.
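The duplicate check itself is simple. A minimal sketch that produces warnings in the format shown above, assuming the scraper keeps the entity_id of every notice it sees in a list (duplicate_warnings is a made-up name):

```python
from collections import Counter


def duplicate_warnings(entity_ids):
    """Return one warning line per notice ID seen more than once."""
    counts = Counter(entity_ids)
    return [
        f"WARNING: notice {entity_id} found {n} times"
        for entity_id, n in sorted(counts.items())
        if n > 1
    ]
```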

Posting to Facebook

I don’t have an immediate need for this, but it’s nice to be able to track notices that disappear shortly after appearing, like 2010/52486. So, just for fun, I added Facebook posting functionality; here’s the resulting little bot: Interpol Red Notice Bot.